A Web crawler starts with a list of URLs to visit, called the seeds. As the crawler visits these URLs, it identifies all the hyperlinks in each page and adds them to the list of URLs to visit, called the crawl frontier. URLs from the frontier are recursively visited according to a set of policies. If the crawler is archiving websites, it copies and saves the information as it goes. The archives are usually stored in such a way that they can be viewed, read and navigated as they were on the live web, but are preserved as “snapshots”.
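The seed-and-frontier loop described above can be sketched in a few lines of Python. This is only an illustration, not how Colinkri or any particular crawler is implemented: the names `crawl`, `LinkExtractor`, `fetch` and `max_pages` are hypothetical, the visiting policy is assumed to be simple first-in, first-out order, and real crawlers add further policies such as politeness delays and robots.txt handling.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seeds, fetch, max_pages=100):
    """Visit URLs starting from the seeds and return {url: html} snapshots.

    `fetch` is a caller-supplied function (an assumption of this sketch)
    that takes a URL and returns the page HTML, or None if unavailable.
    """
    frontier = deque(seeds)   # URLs waiting to be visited
    seen = set(seeds)         # every URL ever added to the frontier
    snapshots = {}            # saved copy of each visited page

    while frontier and len(snapshots) < max_pages:
        url = frontier.popleft()   # assumed policy: first in, first out
        html = fetch(url)
        if html is None:
            continue
        snapshots[url] = html

        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop "#fragment" parts.
            link, _fragment = urldefrag(urljoin(url, href))
            if link not in seen:
                seen.add(link)
                frontier.append(link)

    return snapshots
```

Passing `fetch` in as a parameter keeps the sketch free of network code; a dictionary's `get` method is enough to try it against a handful of in-memory pages.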

The archive is known as the repository and is designed to store and manage the collection of web pages. The repository stores only HTML pages, and each page is stored as a distinct file.
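As a rough illustration of a repository that keeps each HTML page as a distinct file, the sketch below writes one file per URL and records the mapping in a small index. The class name `Repository`, the `index.json` file and the choice of a SHA-256 hash of the URL as the file name are all assumptions made for this example, not a description of any specific archive format.

```python
import hashlib
import json
from pathlib import Path


class Repository:
    """Stores each HTML page as its own file under a root directory."""

    def __init__(self, root):
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)
        # Hypothetical index file mapping each URL to its file name.
        self.index_path = self.root / "index.json"
        if self.index_path.exists():
            self.index = json.loads(self.index_path.read_text(encoding="utf-8"))
        else:
            self.index = {}

    def store(self, url, html):
        """Save one page as a distinct file and return the file name."""
        # Hashing the URL gives a file name that is safe on any filesystem.
        name = hashlib.sha256(url.encode("utf-8")).hexdigest() + ".html"
        (self.root / name).write_text(html, encoding="utf-8")
        self.index[url] = name
        self.index_path.write_text(json.dumps(self.index, indent=2), encoding="utf-8")
        return name

    def load(self, url):
        """Return the stored HTML for a URL, or None if it was never stored."""
        name = self.index.get(url)
        if name is None:
            return None
        return (self.root / name).read_text(encoding="utf-8")
```

Storing the same URL twice overwrites the earlier file in this sketch; an archive that keeps several snapshots per URL would need a timestamp or version in the file name.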

Everything has been taken care of by our team!

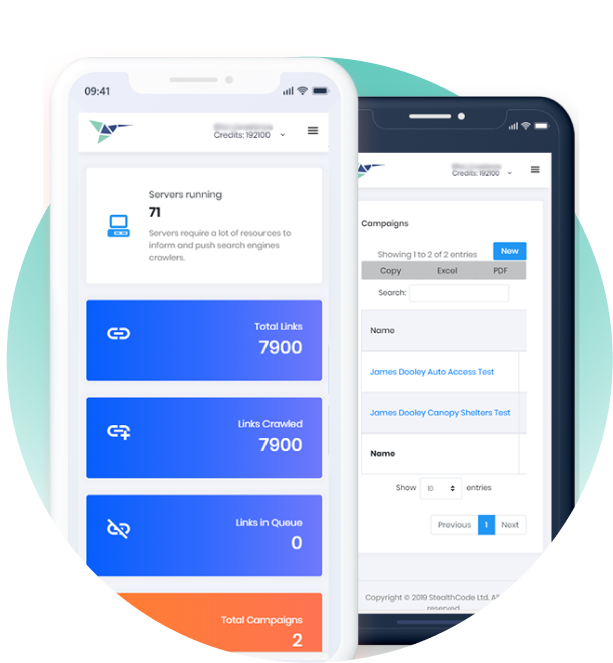

Colinkri uses a private server network, so no proxies are required.

Colinkri is a web-based application, so you can use it in any web browser.

Hundreds of PVA Gmail accounts are included in Colinkri free of charge.